ParalleMIC: The Parallel Mechanisms Information Center (http://www.parallemic.org)


It's mid-summer 2009, and lists of newly released Impact Factors (IFs) for 2008 are beginning to circulate. The IEEE Transactions on Robotics (TRO), for which I am completing my five-year term as associate editor, has boosted its IF by more than 30%, but has slipped to fourth place in the robotics category. Transactions of the Canadian Society for Mechanical Engineering (TCSME), for which I am currently the managing editor, still has an outrageously low IF. And the publisher of Mechanism and Machine Theory has now placed a large banner on the journal's home page boasting its (presumably high) IF. I should finally finish this article, which I have been incubating for months, yet I wonder whether I will ever be entirely satisfied with it, despite the many articles I have read on the subject, the ardent discussions I have had with colleagues, and the literally hundreds of modifications I have made to it as a result.

Don't tell me you don't care about a journal's IF when you are deciding where to submit your article. Hopefully, it's not your number one criterion, but unfortunately, when you apply for a grant, most external reviewers will take into consideration the "prestige" (incorrectly measured by the IF) of the journals where your articles were published. Worse yet, many administrators misuse the IF [1,2], though there has been gradual improvement in this regard thanks to work led by Jean-Pierre Merlet [3], for example, or carried out by the International Mathematical Union [4]. And librarians, of course, partially base their journal subscription decisions on IFs. So, even if all you want is for your article to be read by a wide audience (and not just be accessible to it), publishing in a "highly rated" (another unfortunate synonym of "highly cited") journal is presumably better. As long as you are not obsessed with IFs and you understand the measure well, it is no sin to take it into consideration to some extent (especially when you are unfamiliar with a journal). It is not right, however, to abuse the IF by mentioning it all over your CV, because, while there might occasionally be some correlation between the IF and the overall quality of a journal, judging the quality of a particular article based on the IF of the journal in which it appears is much more unreliable.

Articles on parallel robots are published in a wide variety of journals, but I can readily spot eight where most of them appear. While I will inevitably "rank" those eight journals by presenting various bibliometric data associated with them, this is by no means an attempt to directly compare them. Indeed, while most of the journals studied can be compared in this way, some of them address a very particular audience. So, this article should not be treated as a ranking of journals where articles on parallel robots can be published, but purely as an attempt to educate readers on the whole issue of citation analysis and IFs.

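Here is the list of eight journals that we analyzed for this feature article:

- IEEE Transactions on Robotics (TRO)
- The International Journal of Robotics Research (IJRR)
- ASME Journal of Mechanical Design (JMD)
- Mechanism and Machine Theory (MMT)
- Robotics and Computer-Integrated Manufacturing (RCIM)
- Advanced Robotics (AR)
- Journal of Intelligent and Robotic Systems (JIRS)
- Robotica (Ro)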

All these journals have existed for more than two decades. Some have changed their names, but not during the past five years. Table 1 shows the total number of articles on parallel robots published in these eight journals by five well-known authors during their careers (these authors have published many other articles in these journals, but not on parallel robots); these figures are not intended to justify my choice of the eight journals. Table 1 also shows the number of articles on parallel robots that each journal published in 2008, both in absolute terms and as a percentage of the total number of articles it published that year. We can presume that the JMD and MMT publish by far the most articles on parallel robots. However, judging from the first three issues of the JMD's recent spin-off, the Journal of Mechanisms and Robotics, the latter will certainly outdo its parent in the field of parallel robots.

| | TRO | IJRR | JMD | MMT | RCIM | AR | JIRS | Ro |
|---|---|---|---|---|---|---|---|---|
| J.-P. Merlet | 2 | 6 | 3 | 5 | 0 | 1 | 1 | 1 |
| C.M. Gosselin | 13 | 11 | 27 | 19 | 0 | 0 | 0 | 1 |
| V. Parenti-Castelli | 1 | 2 | 15 | 1 | 0 | 0 | 0 | 1 |
| M. Shoham | 5 | 4 | 3 | 4 | 2 | 0 | 0 | 1 |
| J. Angeles | 4 | 1 | 6 | 2 | 1 | 1 | 0 | 1 |
| Articles on parallel robots in 2008 | 2 (1%) | 4 (5%) | 20 (13%) | 28 (26%) | 3 (4%) | 5 (6%) | 3 (5%) | 11 (16%) |

Table 1: Articles on parallel robots in the eight journals studied.

The IF is a (very controversial) measure used to evaluate journals and, unfortunately, scientists [1,2,4]. It was introduced by the Institute for Scientific Information (later absorbed by Thomson Reuters) several decades ago and can be found on an annual basis in the Journal Citation Reports (JCR), a Thomson Reuters product to which our library subscribes. Each year's IFs are released in the middle of the following year.

Throughout this period, the IF for a given year Y for a given journal has been computed as the ratio of the number of times articles published in this journal in years Y-1 and Y-2 were cited (in articles in selected journals or conference proceedings) during year Y, to the total number of "citable items" published in years Y-1 and Y-2.
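To make this definition concrete, here is a minimal Python sketch of the two-year IF. The function name and the counts in the example are hypothetical, chosen only to illustrate the arithmetic; they are not JCR data, and the sketch ignores the JCR's own rules for deciding what counts as a "citable item."

```python
def two_year_impact_factor(citations_in_year, citable_items, year):
    """Two-year IF of a journal for `year`.

    citations_in_year: citations received during `year`, keyed by the
        publication year of the cited articles.
    citable_items: number of citable items published, keyed by year.
    """
    window = (year - 1, year - 2)
    cites = sum(citations_in_year.get(y, 0) for y in window)
    items = sum(citable_items[y] for y in window)
    return cites / items


# Hypothetical counts for an imaginary journal (not JCR data).
cites_2008 = {2007: 150, 2006: 210, 2005: 90}  # 2005 falls outside the window
items = {2007: 70, 2006: 80}

print(round(two_year_impact_factor(cites_2008, items, 2008), 3))  # prints 2.4
```

Note that the 90 citations to 2005 articles are simply ignored: only the 360 citations to 2006-2007 articles enter the numerator, over 150 citable items.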

There are dozens of editorials, articles, and reports criticizing the IF and pinpointing its numerous pitfalls (see [1-7] for a recent sample), though the inventor of the IF, Dr. Eugene Garfield, has a plausible response to every objection [8]. We will not discuss all these shortcomings in detail here, but it is important to list a few of the technical issues that are particularly relevant to our sample of journals.

There are also fundamental flaws that are much more difficult to study. We can argue, for example, that citing an article is not necessarily a sign of endorsement of that article, or that many articles are cited by authors who have never even read them [4].

Finally, there are various legitimate ways to increase the IF of a journal. The most common are to publish the journal as frequently as possible, or to post each article online as soon as it is typeset, before it is assigned to an issue. Of the eight journals studied, only AR does not offer this service, which puts it at a slight disadvantage.

As already stressed, the coverage used for obtaining the data for the JCR 2008 IF edition is still far from complete (though what does "complete" mean?). Furthermore, occasional errors are inevitable, since the procedure involves scanning, optical character recognition (OCR), and the recognition of source, title, and publication year in reference entries that follow various bibliographical styles and are often incomplete or tainted with typos (particularly those in conference articles). However, there is no better citation database currently available, with the probable exception of Elsevier's Scopus. Therefore, we have no choice but to rely on Thomson Reuters' data and occasionally validate them using the Scopus database. Would the results of our analyses have been significantly different if the JCR data were perfect? I doubt it.

Most of the analyses were relatively simple to do, since the values required were readily available in the JCR. Others called for the use of Thomson Reuters' Web of Science database to output raw data that were further manipulated. Finally, Elsevier's Scopus database, which is much larger, was also used to validate some of the JCR data. I didn't use Google Scholar because its coverage, although much larger, is inconsistent and largely undocumented. For example, my numerous attempts to make Google Scholar index the TCSME articles that are now available online were fruitless, but the database promptly indexed my TCSME article when I posted it (illegally...) on my personal web site. In fact, I can include any pseudo article in Google Scholar simply by posting it on my web site (here is the proof)! Therefore, if we are to rely on Google Scholar, a herculean effort will be required to clean up the results. There is free software, called Publish or Perish [11], that does some parsing, but it does not remove citations that come from doubtful sources. Extensive manual checking is therefore absolutely necessary.

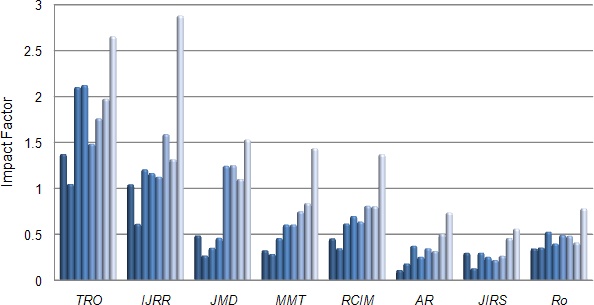

Table 2 and Figure 1 show the trends in IFs for the eight journals studied, from 2005 to 2008 and from 2001 (dark blue bars) to 2008 (light blue bars), respectively. The obvious trend towards increasing IF values can be explained mainly by Thomson Reuters' constantly increasing coverage and by improved citation-matching algorithms.

Now, how do we explain the fact that the IF of the IEEE Robotics and Automation Magazine (not covered in this study) suddenly jumped from 0.892 (2007) to 3.000 (2008)? Did the quality of the magazine suddenly triple? The answer is simple. First, the JCR's 2008 coverage is much larger. For example, there is a big difference between the magazine's JCR 2007 IF and that measured using Scopus data, but the JCR 2008 IF and that measured using Scopus are almost the same. Second, three of the magazine's 2006 articles accounted for a third of its 2008 IF (the so-called "blockbuster" effect).

Such major fluctuations clearly indicate that the current IF of a journal is much less meaningful than the IF trend over several years. Fortunately, this trend is shown in the JCR.

| Year | TRO | IJRR | JMD | MMT | RCIM | AR | JIRS | Ro |
|---|---|---|---|---|---|---|---|---|
| 2005 | 1.486 | 1.127 | 1.245 | 0.607 | 0.638 | 0.348 | 0.219 | 0.492 |
| 2006 | 1.763 | 1.591 | 1.252 | 0.750 | 0.810 | 0.318 | 0.265 | 0.483 |
| 2007 | 1.976 | 1.318 | 1.103 | 0.839 | 0.804 | 0.504 | 0.459 | 0.410 |
| 2008 | 2.656 | 2.882 | 1.532 | 1.437 | 1.371 | 0.737 | 0.560 | 0.781 |

Table 2: Latest impact factors for the eight journals studied (source: data directly available from current and past editions of the JCR).


Figure 1: Trend of impact factors from 2001 to 2008 (source: data directly available from current and past editions of the JCR).
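One way to look at the trend rather than at a single year is to fit a straight line to several years of IFs. The sketch below does this for three of the journals in Table 2 with an ordinary least-squares slope; the choice of a linear fit over four years is our own illustrative assumption, not a method used by the JCR.

```python
# 2005-2008 IFs copied from Table 2 for three of the eight journals.
years = [2005, 2006, 2007, 2008]
table2 = {
    "TRO": [1.486, 1.763, 1.976, 2.656],
    "IJRR": [1.127, 1.591, 1.318, 2.882],
    "Ro": [0.492, 0.483, 0.410, 0.781],
}


def ls_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs (IF units per year)."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


for name, ifs in table2.items():
    jump = ifs[-1] / ifs[-2] - 1  # relative change from 2007 to 2008
    print(f"{name}: trend {ls_slope(years, ifs):+.3f}/year, "
          f"2007->2008 change {jump:+.0%}")
```

For Robotica, the 2007-2008 jump is about +90%, whereas the fitted trend over the four years is only about +0.08 per year, which is exactly the kind of contrast that makes a single year's IF misleading.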

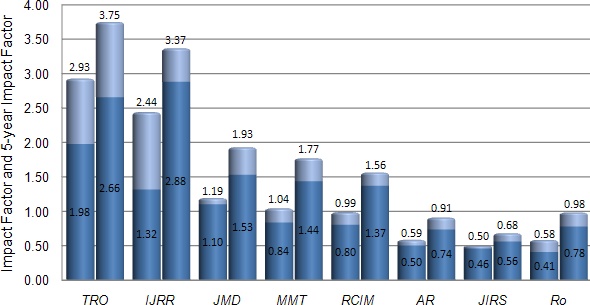

One of the major criticisms of the traditional IF is that it considers a very short time period – two years. In response, Thomson Reuters introduced the so-called five-year IF in January 2009, which, as its name suggests, takes into account the average of citations over a five-year period. Figure 2 shows the five-year IFs superimposed over the traditional IFs (in dark blue) for the eight journals studied: for 2007 (left bar in each pair) and 2008 (right bar in each pair). Not surprisingly, the five-year IF is larger than the two-year traditional one. However, except for TRO and the IJRR, the increase is very slight. So it seems that the traditional two-year period is sufficiently representative for the eight journals studied, at least for now.


Figure 2: Traditional impact factors (in dark blue) and five-year impact factors (number over the light blue bars) for 2007 and 2008 (source: data directly available from current and past editions of the JCR).
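The five-year IF simply widens the publication window. A minimal sketch, assuming the same definition as the two-year IF with publication years Y-1 through Y-5 in place of Y-1 and Y-2; the function name is ours, and the counts are invented so that older articles are still being cited, which is what makes the five-year value larger.

```python
def windowed_impact_factor(citations_in_year, citable_items, year, window):
    """IF of a journal for `year` over the `window` preceding publication years.

    window=2 reproduces the traditional IF; window=5 gives the five-year IF.
    """
    pub_years = range(year - window, year)
    cites = sum(citations_in_year.get(y, 0) for y in pub_years)
    items = sum(citable_items.get(y, 0) for y in pub_years)
    return cites / items


# Hypothetical data (not JCR data): citations received in 2008, keyed by the
# publication year of the cited article, and citable items per year.
cites_2008 = {2007: 60, 2006: 150, 2005: 160, 2004: 140, 2003: 110}
items = {y: 75 for y in range(2003, 2008)}

print(round(windowed_impact_factor(cites_2008, items, 2008, 2), 3))  # prints 1.4
print(round(windowed_impact_factor(cites_2008, items, 2008, 5), 3))  # prints 1.653
```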

One of the frequently criticized aspects of the JCR is that its data cannot be validated with precision [9,10]. Indeed, while the Web of Science database, presumably used to populate the JCR, is readily accessible, its algorithm for finding citations is not the same; probably the only difference is that some manual corrections are made for the JCR. These corrections may concern, for example, citations with incomplete or slightly incorrect journal titles.

It is possible to perform a very detailed manual inspection of the IF of a given journal, since the JCR indicates how many citations made in year Y by each particular source (journal or conference proceedings) go to articles published in that journal in years Y-1, Y-2, etc. However, such a process would take days to complete for journals like TRO or the IJRR, since their IFs are built on citations from several hundred different sources. Besides, dozens of these sources are not even disclosed in the JCR (they are combined into a single "all others" item).

We first tried to validate the JCR's 2008 IFs directly, using the "Create Citation Report" function in Web of Science. While the number of citable items for each of the eight journals is exactly the same as the one indicated in the JCR, the number of citations varies. Table 3 shows the JCR's 2008 IFs and the constructed 2008 IFs based on Web of Science data. We can only speculate on the reasons for the discrepancies between the two sources, but the only certain conclusion is that the JCR's IFs are prone to error.

This seems perfectly normal, of course, since authors can easily misspell, mistype, or wrongly abbreviate a journal's name. Indeed, journals with simple names (such as Robotica) show fewer discrepancies than journals with complex names that are often abbreviated incorrectly (such as Robotics and Computer-Integrated Manufacturing).

We also tried to validate the JCR's 2008 IFs using the "Citation Tracker" function in Scopus. The number of citable items for each of the eight journals is almost always the same as the one indicated in the JCR, but the number of citations varies. Table 3 shows the 2008 IFs constructed on the basis of Scopus data.

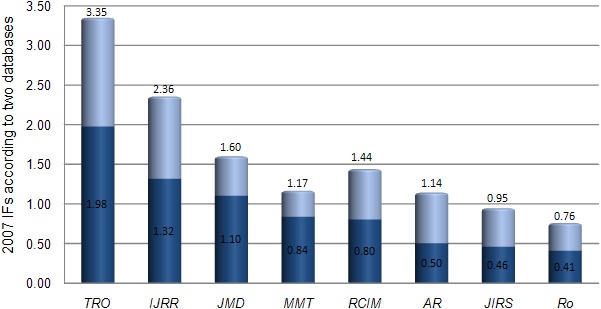

Finally, we performed the same study in Scopus for the JCR's 2007 IFs. The results are shown in Fig. 3, where the JCR's 2007 IFs are the bars in dark blue, while the 2007 IFs based on Scopus are the bars in light blue (only the increase is visible).

The conclusions of this validation are fairly obvious. First, the 2008 edition of the JCR covers a much larger spectrum of sources (journals and conference proceedings) than the 2007 edition, now almost as large as that of Scopus. Second, journals with a high IF are surprisingly well handled in the 2008 edition of the JCR, in contrast to journals with a low IF.

| | TRO | IJRR | JMD | MMT | RCIM | AR | JIRS | Ro |
|---|---|---|---|---|---|---|---|---|
| JCR IF | 2.656 | 2.882 | 1.532 | 1.437 | 1.371 | 0.737 | 0.560 | 0.781 |
| WoS IF | 2.693 (+1.4%) | 3.015 (+4.6%) | 1.532 (0.0%) | 1.503 (+4.6%) | 1.121 (-18.2%) | 0.941 (+27.7%) | 0.768 (+37.1%) | 0.753 (-3.6%) |
| Scopus IF | 2.822 (+6.3%) | 3.059 (+6.1%) | 1.492 (-2.6%) | 1.599 (+11.3%) | 1.734 (+26.5%) | 1.000 (+35.7%) | 0.798 (+42.5%) | 0.993 (+27.1%) |

Table 3: JCR's 2008 IFs and constructed 2008 IFs based on Web of Science and Scopus.


Figure 3: 2007 JCR's IFs and constructed 2007 IFs based on Scopus data.
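The percentages in Table 3 are simply the relative deviation of each constructed IF from the JCR value. The short Python sketch below recomputes them from the table, which is a convenient way to check the table itself; it adds nothing beyond the figures already shown, and the helper name is ours.

```python
# 2008 IFs from Table 3: JCR values and the values we constructed from WoS and Scopus.
jcr = {"TRO": 2.656, "IJRR": 2.882, "JMD": 1.532, "MMT": 1.437,
       "RCIM": 1.371, "AR": 0.737, "JIRS": 0.560, "Ro": 0.781}
wos = {"TRO": 2.693, "IJRR": 3.015, "JMD": 1.532, "MMT": 1.503,
       "RCIM": 1.121, "AR": 0.941, "JIRS": 0.768, "Ro": 0.753}
scopus = {"TRO": 2.822, "IJRR": 3.059, "JMD": 1.492, "MMT": 1.599,
          "RCIM": 1.734, "AR": 1.000, "JIRS": 0.798, "Ro": 0.993}


def rel_diff(constructed, reference):
    """Relative deviation of a constructed IF from the JCR IF."""
    return constructed / reference - 1


for j in jcr:
    print(f"{j:5s} WoS {rel_diff(wos[j], jcr[j]):+6.1%}  "
          f"Scopus {rel_diff(scopus[j], jcr[j]):+6.1%}")
```

Sorting the output by the size of the deviation makes the second conclusion above visible at a glance: the largest discrepancies belong to the low-IF journals.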

One flaw of the IF measure is that it is an average rather than a median, even though the citation distribution is highly skewed and far from normal (Gaussian). In other words, a few review articles or a couple of blockbuster articles can significantly inflate a journal's IF. Using the "Create Citation Report" function in Web of Science, we analyzed the number of times each article published in 2006-2007 in each of the eight journals studied was cited in 2008 by source items covered by Web of Science. Unfortunately, as already mentioned, calculations performed using these data do not lead to the same citation numbers as reported in the JCR (the differences are often considerable). Therefore, we cannot know exactly how many articles published in a given journal in 2006-2007 contributed to the 2008 IF of that journal.

According to Web of Science, the articles published in 2006-2007 in the eight journals studied were cited between 0 and 22 times in 2008, according to what resembles an exponential distribution. Only two of the heavily cited articles were review articles (in MMT and the JMD).
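The effect of a skewed citation distribution on an average is easy to see with a toy example. The counts below are invented (they are not the Web of Science data behind Fig. 4), but their shape loosely mimics the roughly exponential distribution described above, with two heavily cited articles.

```python
import statistics

# Hypothetical 2008 citation counts for 20 articles published in 2006-2007
# in an imaginary journal: most are cited rarely, two are "blockbusters".
counts = [0, 0, 0, 0, 1, 1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 4, 5, 6, 17, 22]

mean = statistics.mean(counts)      # what an IF-style average reports
median = statistics.median(counts)  # a more robust summary of a skewed list
share_cited = sum(c > 0 for c in counts) / len(counts)

print(f"mean {mean:.2f}, median {median}, cited at least once {share_cited:.0%}")

# Dropping the two blockbusters changes the mean far more than the median.
rest = sorted(counts)[:-2]
print(f"without blockbusters: mean {statistics.mean(rest):.2f}, "
      f"median {statistics.median(rest)}")
```

Here the mean falls from 3.65 to about 1.89 when the two most cited articles are removed, while the median only moves from 2 to 1.5.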

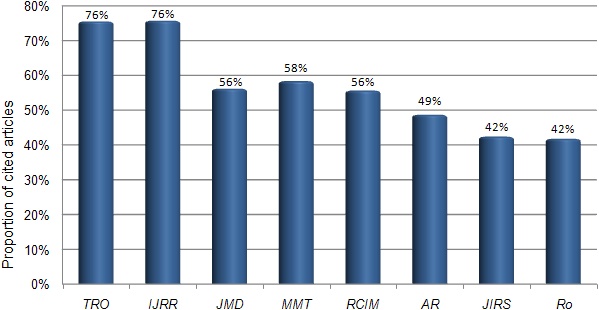

Figure 4 shows the percentage of articles published in 2006-2007 in the eight journals studied that were cited at least once in 2008 by a source item covered by Web of Science. Once again, the actual numbers are probably slightly higher. Figure 4 clearly shows that the proportion of cited articles is larger in high-IF journals than in low-IF journals. However, if we look closely at the raw data, we see that high-IF journals like TRO and the IJRR published many articles in 2006-2007 that were cited 22, 17, 15, 14, 10, ..., times in 2008, while low-IF journals like the JIRS and Ro published only a couple of articles in 2006-2007 that were cited more than 3 times.

It is also interesting to note that about one-third of the 2005 articles in Ro, JIRS, and AR have not been cited to date, compared with only 4% of TRO 2005 articles and 13% of IJRR 2005 articles. And, as we saw in Fig. 2, none of the eight journals studied can claim that its relative visibility would improve substantially if IFs were measured over a longer period.


Figure 4: Proportion of articles published in 2006-2007 and cited in 2008 (source: our own analyses based on Web of Science).

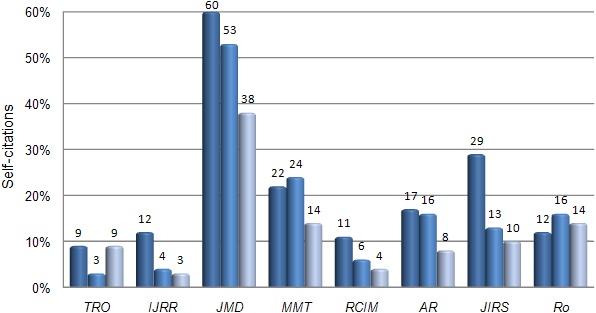

There is no problem with articles in a given journal citing other articles in that same journal; in fact, for most journals, these so-called journal self-citations contribute only a small share of the IF (under 20%). Conversely, the fact that a journal has very few self-citations might be interpreted to mean that the authors who publish in that journal do not find its other articles interesting enough (so why, then, would they publish in that particular journal?). However, when the editor of a journal encourages the authors of accepted articles to "seriously review recently published papers" in that same journal (as the editor of a journal not covered in this study recently asked me to do), this is a gray area. Fortunately, journal self-citations are clearly identified in the JCR, and it is clear from Fig. 5 which of the eight journals studied has been self-citing. This means that, had Prof. Mike McCarthy not been following this practice, the IF of the JMD would have been much lower. Yet there is no doubt that the JMD is one of the top journals in our field.


Figure 5: Proportion of journal self-citations (i.e., reduction of IFs when journal self-citations are excluded) from 2006 to 2008 (source: data directly available from the JCR).
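Because the JCR reports journal self-citations separately, removing them is a one-line adjustment of the numerator. A minimal sketch with invented counts and a hypothetical helper name; since the denominator does not change, the relative reduction of the IF equals the share of self-citations among all citations, which is the quantity plotted in Fig. 5.

```python
def if_without_self_citations(total_cites, journal_self_cites, citable_items):
    """Return (IF, IF excluding journal self-citations, relative reduction)."""
    full = total_cites / citable_items
    external = (total_cites - journal_self_cites) / citable_items
    reduction = journal_self_cites / total_cites  # equals (full - external) / full
    return full, external, reduction


# Hypothetical counts for an imaginary journal (not JCR data).
full, external, reduction = if_without_self_citations(300, 90, 200)
print(f"IF {full:.3f} -> {external:.3f} without self-citations ({reduction:.0%} less)")
```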

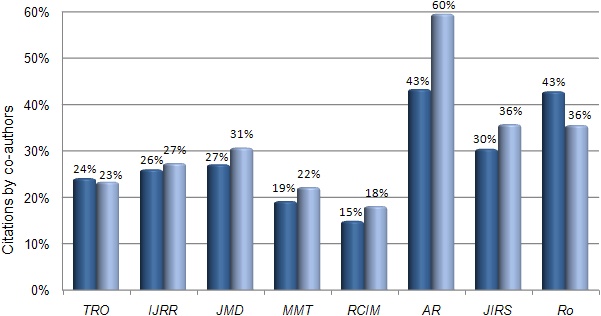

It could be debated whether journal self-citations should contribute to the IF of a journal, though Thomson Reuters clearly states that they should not have a dominant influence on the total level of citation. However, we should all agree that a measure of the impact or visibility of a journal should not take into account citations by co-authors, i.e., authors citing their own articles [12].

Unfortunately, Web of Science does not make it possible to display how many times an article has been cited by articles published in a given year that share no author with the article in question. However, we have tried to measure this phenomenon using the Scopus database, in the same manner in which we used it to obtain the data in the last row of Table 3, but this time selecting the option "Exclude from citation overview: Self-citations of all authors." Figure 6 shows the resulting percentage of our Scopus-based constructed IFs that corresponds to citations by co-authors and should therefore be excluded.

It can be seen from Fig. 6 that the three journals with the lowest IF rely heavily on citations by co-authors. It is also interesting to note that the data shown in Fig. 6 do not have any correlation with the number of articles in each journal.

The fact that self-citations by co-authors are not excluded is clearly one of the biggest flaws in the calculation of the JCR's IFs, and I hope that the JCR will at least start to report this bibliometric measure in the same manner that it reports journal self-citations.


Figure 6: Proportion of citations by co-authors in 2007 to articles published in 2005-2006 (dark blue bars) and in 2008 to articles published in 2006-2007 (source: our own analyses based on Scopus data).
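The rule applied above (a citation counts only if the citing and cited articles share no author) can be sketched in a few lines. This is our own illustrative model, not how Scopus implements its option: it assumes each author is identified by an unambiguous name string, which real databases cannot take for granted, and the articles and names below are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    authors: frozenset  # author names, assumed to be unambiguous strings


def count_citations(citations, exclude_shared_authors=True):
    """Count (citing, cited) pairs, optionally dropping pairs that share an author."""
    return sum(
        1
        for citing, cited in citations
        if not (exclude_shared_authors and citing.authors & cited.authors)
    )


# Hypothetical articles with invented author names.
a = Article(frozenset({"Smith", "Lee"}))
b = Article(frozenset({"Lee", "Kumar"}))
c = Article(frozenset({"Novak"}))
d = Article(frozenset({"Garcia", "Smith"}))

citations = [(b, a), (c, a), (d, a), (c, b)]  # (citing, cited) pairs
total = count_citations(citations, exclude_shared_authors=False)
external = count_citations(citations)
print(total, external, f"{1 - external / total:.0%} by co-authors")  # 4 2 50% by co-authors
```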

Each of the journals studied draws citations from more than a hundred sources. However, some sources contribute particularly heavily to the IF of certain journals, because these sources are often conference proceedings, which, unlike journals, are published very promptly. For example, according to the JCR, about 23% of the 2008 IFs of both TRO and the IJRR were contributed by ICRA'08 and IROS'08 alone. Similarly, about 23% of the 2008 IF of the JMD comes from ASME conferences. Of course, this is completely legitimate.

Usually, when the number of published articles (the denominator in the IF) is low, the IF tends to be higher. This is probably because journals with fewer articles are in a better position to choose the articles they accept. Their editors can also more easily manipulate the IFs, e.g., by publishing one or two review articles. However, for the eight journals analyzed in this study, we can clearly see that there is no direct correlation between the number of articles and the IF (Table 4).
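A quick way to check for the expected inverse relation is a Pearson correlation coefficient between the 2008 IFs (Table 2) and the number of articles published in 2008 (Table 4). With only eight journals this is a rough indicator at best, and pairing 2008 article counts with the 2008 IF (which is computed from 2006-2007 articles) is a simplifying assumption of ours.

```python
import math

# 2008 IFs (Table 2) and numbers of articles published in 2008 (Table 4),
# in the order TRO, IJRR, JMD, MMT, RCIM, AR, JIRS, Ro.
impact = [2.656, 2.882, 1.532, 1.437, 1.371, 0.737, 0.560, 0.781]
articles = [137, 76, 150, 107, 77, 81, 63, 67]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


print(f"r = {pearson(articles, impact):.2f}")
```

On these figures the coefficient comes out mildly positive (about 0.43) rather than negative. With eight data points that is far from conclusive, but it shows no sign of the inverse relation one might expect.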

It is often argued that organizing special issues is a way to drive up the IF of a journal. Although we did not perform a detailed study on this factor, a quick verification revealed that this is not the case for the eight journals studied. For example, Issue 5 of TRO 2007 was a special issue on human–robot interaction; it contributed just 4% of the 2008 IF of TRO, while its articles accounted for about 8% of the total number of articles published in 2006-2007.

It seems logical that a journal that is widely available, not to mention freely available, will have a relatively high IF. Table 4 shows that this is not necessarily true. For example, Ro is relatively widely available (at least in the USA), yet it has a low IF. In contrast, the open-access International Journal of Advanced Robotic Systems, which is not even indexed in the JCR, has a constructed 2008 IF (using Scopus) of 0.863, which is almost as good as the constructed 2008 IF of Ro (0.993).

Wide availability certainly helps, then, but it is no guarantee of a high IF. Indeed, we usually browse new issues of only our favourite (high-IF?) journals. We come across the other articles that we might decide to cite only when they are referred to in an article that we read (by which time it is usually too late for our citation to influence an IF) or when we conduct a keyword search using a database such as Compendex, Web of Science, Scopus, or Google Scholar.

| | TRO | IJRR | JMD | MMT | RCIM | AR | JIRS | Ro |
|---|---|---|---|---|---|---|---|---|
| US libraries subscribed (according to WorldCat) | 326 | 254 | 281 | 161 | 118 | 51 | 180 | 235 |
| Institutional subscription rate / number of articles per year | $7.5* | $27 | $4.5** | $39 | $27 | $47 | $39 | $14.5 |
| For-profit ($) or non-profit (non-$) | non-$ | $ | non-$ | $ | $ | $ | $ | non-$ |
| Number of articles in 2008 | 137 | 76 | 150 | 107 | 77 | 81 | 63 | 67 |

* relies on a voluntary charge of $110 per page

** relies on a voluntary charge of $75 per page

Table 4: Circulation, number of articles, and subscription prices per article.

Now that we have a better understanding of how the IFs of the eight journals studied are composed, let's not forget that publishing is normally a business, and the higher the IF of a journal, the stronger the justification for higher subscription rates. Indeed, as two economists recently put it in an open letter to universities, "The prices set by profit-maximizing publishers are determined not by costs, but by what the market will bear" [13].

Now, take the IJRR with its 254 institutional subscriptions in the USA alone. Since most libraries subscribing to this journal do not benefit from a bundle package or from consortium pricing and pay the full price of $1,600 annually (for the electronic version alone), an annual revenue of more than $400K is generated for SAGE Publications! The journal publishes about 1,400 pages annually. A professional typesetting and copy editing service costs no more than $70 per page (at least here in Canada), which constitutes a total annual expense of only $100K. Conversion into XML, DOI deposit with CrossRef, and so on, accounts for no more than $20K for a journal of this size. And all this is certainly a gross overestimate, since most commercial publishers outsource these labor-intensive services to China or India (we are charged $13 per page for TCSME by the typesetter of Nature). Finally, a Web-based peer-review and online submission system such as Manuscript Central for a journal of this size costs about $8K annually. This leaves the publisher with a profit of more than $270K from the USA alone...
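The back-of-the-envelope estimate above can be reproduced in a few lines. Every input is one of the figures quoted in the paragraph; as stressed there, the cost figures are deliberate overestimates, so under these assumptions the resulting margin errs on the low side.

```python
# Figures quoted above for the IJRR (US institutional subscriptions only).
subscriptions = 254
price = 1_600                 # USD per year, electronic version only
pages = 1_400                 # pages published per year
typesetting_per_page = 70     # USD, upper bound quoted for Canada
xml_doi_etc = 20_000          # USD per year, upper bound
peer_review_system = 8_000    # USD per year

revenue = subscriptions * price
costs = pages * typesetting_per_page + xml_doi_etc + peer_review_system
print(f"revenue ${revenue:,}, costs ${costs:,}, margin ${revenue - costs:,}")
# prints: revenue $406,400, costs $126,000, margin $280,400
```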

A similar simplistic analysis for Robotica leaves its publisher, Cambridge University Press, with an annual "profit" of at least $200K from the USA alone. Considering that the official circulation of this journal is 600, the "profit" can easily be double that. Yet Cambridge University Press is a non-profit organization. Where does all that money go? It's difficult to tell from their 2008 annual report.

Since many journals have become outrageously expensive for institutions (think of MMT, for example), many scientists believe that the future lies in open-access publishing. However, there are various business models for open access. In the most common one, the financial burden is simply shifted onto researchers in the form of processing fees. Indeed, open-access publishers such as IN-TECH and Hindawi (the only two that own open-access robotics journals, though Hindawi's Journal of Robotics is a flop) are for-profit businesses. Their main goal is to continuously increase their profit. For these publishers, the only ways to do so are to increase the number of journals (but the demand is already saturated), to increase the number of articles in their journals (but the supply is limited), or simply to start increasing their compulsory page charges. While both open-access robotics journals currently charge very small introductory fees (about $300 per article), more established journals charge as much as $975 per article! Then again, I can't imagine how IN-TECH makes any substantial profit by charging 20 euros per page, for an annual revenue of 5,000 euros (or a bit more), for their International Journal of Advanced Robotic Systems. The editorial assistant of TCSME alone costs us more than that annually.

Therefore, if you are angry that your article will make its publisher richer, I would suggest that you publish in non-profit journals like IEEE Transactions or ASME Transactions and pay at least a part of the voluntary page charges (although I find the IEEE's charge of $110 and the ASME's charge of $75 per page unjustifiable). At least you will be supporting scientific organizations and not commercial enterprises. The problem, though, is that these huge organizations are not transparent.

I had hoped this study would reveal some great secrets about the world of publishing, but it has not. We did, nevertheless, make some observations as a result of conducting it. Of course, they apply mainly to the eight journals considered.

The study also confirms, to some extent, that the JCR can be used to compare journals in the same field. However, only substantial differences in IFs (some say at least 25%), sustained over several consecutive years, should be considered meaningful, because IFs vary considerably from year to year owing to statistical noise (recall the case of the IEEE Robotics and Automation Magazine). In that sense, neither the IEEE Transactions on Robotics nor the International Journal of Robotics Research can lay exclusive claim to the title of "top journal in robotics," but both are clearly more "prestigious" than Advanced Robotics or Robotica. Nevertheless, I do not intend to drop my individual $300 annual subscription to the latter, but I will certainly not recommend that my library pay $2,511 annually (!!!) for Advanced Robotics.
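The rule of thumb above (a gap of at least 25% sustained over several consecutive years) can be written as a simple check. A minimal sketch using the 2005-2008 values of Table 2; measuring the gap relative to the lower IF is our own assumption, since the text does not say which IF the 25% refers to, and the function name is hypothetical.

```python
# 2005-2008 IFs from Table 2.
table2 = {
    "TRO": [1.486, 1.763, 1.976, 2.656],
    "IJRR": [1.127, 1.591, 1.318, 2.882],
    "AR": [0.348, 0.318, 0.504, 0.737],
    "Ro": [0.492, 0.483, 0.410, 0.781],
}


def consistently_ahead(a, b, threshold=0.25):
    """True if journal a's IF exceeds journal b's by at least `threshold`
    (relative to b's IF) in every year of the series."""
    return all(x >= (1 + threshold) * y for x, y in zip(table2[a], table2[b]))


print(consistently_ahead("TRO", "IJRR"), consistently_ahead("IJRR", "TRO"))  # False False
print(consistently_ahead("TRO", "AR"), consistently_ahead("IJRR", "Ro"))    # True True
```

Neither TRO nor the IJRR passes the test against the other, while both pass it comfortably against AR and Ro, which matches the conclusion drawn above.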

The study also illustrated that, even in the most often cited journals, there are many articles that are seldom or never cited. Therefore, the IF should in no case be used to blindly evaluate (using formulas) individual articles or researchers.

Finally, I urge authors to consider the following simple guidelines:

I hope that this study has shown that the impact factor, or even detailed citation analyses such as this one, should not be taken too seriously. Judging the quality of a journal merely from the number of citations it attracts is almost as reliable as judging the quality of this web site from the aggregate number of visitors. As one editor wrote in 1998, "for many scientists [the release of the latest impact factors] is no more than light entertainment, the scientific equivalent of tabloid gossip" [14]. Perhaps you too will now join that group of scientists.

As I already mentioned, Thomson Reuters has a continuously evolving, undisclosed list of possible variants and abbreviations for every journal name, and its automatic algorithm for assigning citations takes into account only the source name and the publication year in each reference of an article in a source covered by Web of Science (WoS). Thus, the citation data for journals with complex names are very prone to error.

For example, until recently, "ASME J. Mech. Des." was not recognized as a synonym of "Journal of Mechanical Design." Therefore, none of the older citations to JMD articles that used this abbreviation are credited in WoS, whereas they are recognized in Scopus.
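To see why an unusual abbreviation costs citations, consider a lookup that, like the procedure described above, uses only the cited source name and the publication year. This is a hypothetical sketch: the synonym table, the normalization rule, and the function names are ours, not Thomson Reuters'; any variant absent from the table is simply lost until someone adds it.

```python
import re

# Hypothetical synonym table (the real Thomson Reuters list is undisclosed),
# keyed by normalized cited-source strings.
SYNONYMS = {
    "journal of mechanical design": "Journal of Mechanical Design",
    "j mech des": "Journal of Mechanical Design",
    "robotica": "Robotica",
}


def normalize(source):
    """Lower-case, replace punctuation with spaces, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", source.lower()).split())


def match_reference(source, year):
    """Assign a reference to (journal, year) using only the source name and year.

    Returns None when the source string is not a known variant.
    """
    journal = SYNONYMS.get(normalize(source))
    return (journal, year) if journal else None


print(match_reference("J. Mech. Des.", 2006))       # ('Journal of Mechanical Design', 2006)
print(match_reference("ASME J. Mech. Des.", 2006))  # None: the citation is lost
SYNONYMS["asme j mech des"] = "Journal of Mechanical Design"  # a later fix
print(match_reference("ASME J. Mech. Des.", 2006))  # now credited
```

Adding the variant fixes new citations going forward, but references already processed as unmatched are not revisited by this lookup, which mirrors the situation with older citations described next.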

It is important to understand two things. First, new citations to "ASME J. Mech. Des." are now credited to the JMD. This means that the IF of the JMD is now computed with greater precision (it actually matches the value computed with Scopus), whereas it used to be underestimated. Second, the problem with older citations was not (and will certainly never be entirely) corrected. This means that you cannot rely on WoS to count the exact number of citations (by sources covered in WoS) to your older JMD articles.

This type of problem is not unique to the JMD. As I already mentioned, TRO had similar problems. Just as WoS used to attribute citations to "ASME J. Mech. Des." to "ASME" alone (you can see this using the "Cited Reference Search" in WoS), the staff of Thomson Reuters reported more than a hundred citations attributed to "IEEE T." alone.

Therefore, the citation data of WoS will always be unreliable, particularly for older citations (unless each journal asks Thomson Reuters to correct its data, as was done in the case of TRO).

| Copyright © 2000– by Ilian Bonev | Published on: August 25, 2009 |